CONTENTS


The Seed of Life (SoL ~ so-ul)
- Ancestral Dance: Mangrove Forest Wetland Soundscape
- The Travellers: Freshwater Lake Wetland Soundscape
- The Black Forest: World-Wide-Forest Soundscape
- Shelter and Rest: Cave Limestone Soundscape
- Harvesting the Spirit of the Rice Paddy: Rice Paddy Field Soundscape

The Shadow
- Tingkap Nada: Live Location Audio Streamer Installation
- Sfera Nada: Off-grid Live Location Audio Streamer Device
- Gerak Nada: Interactive 3-D Motion Sound Event

Miroirs of Malay Rebab
- Gajah Menangis
- Menghadap
- Enchanted Rain
- Nu
- San

The Shadow

Tingkap Nada: Live Audio Streamer Installation

Part 1: Selecting the Beans

Keywords: Audio streaming, server, interface, client, Locus Sonus

Audio streaming emerged in the 1990s as an alternative platform for musicians to engage and communicate with their audiences digitally. Streaming transmits data, especially audio and video, over the internet as a steady, continuous flow, so that playback can start while the rest of the data is still being received; the receiving device holds a small reserve of incoming data, a process known as buffering (Lee, J. 2005). Streaming has become a new norm, especially during the Covid-19 pandemic movement restrictions, when music organisers, music venues, and musicians, including myself, were severely affected by the shutdown of physical, socially engaged events. According to Forbes, internet usage increased by 70% during the pandemic, and streaming by 12% (Grant, K. 2020). Since then, computer engineers, web developers, music educators, and musicians, to name a few, have been collaborating to make the musical experience and engagement of online communities in the virtual 'world' more realistic, real-time, and efficient. This has drawn my interest in exploring in depth the possibilities of audio streaming for creating and disseminating my artistic research outputs.
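To make the idea of buffering concrete, here is a minimal Python sketch. It assumes a stream split into fixed-length chunks whose network arrival times are known; the arrival times are invented for illustration. It computes the smallest start-up delay that lets playback run without gaps: chunk i plays at start + i × chunk duration, so it must have arrived by then.

```python
def min_startup_delay(arrivals, chunk_seconds):
    """Smallest playback start time (s) that avoids buffer underruns.

    arrivals[i] is the time (s) at which chunk i has fully arrived.
    Chunk i is scheduled to play at start + i * chunk_seconds, so the
    start time must satisfy start >= arrivals[i] - i * chunk_seconds
    for every chunk.
    """
    if chunk_seconds <= 0:
        raise ValueError("chunk_seconds must be positive")
    return max(t - i * chunk_seconds for i, t in enumerate(arrivals))


# Hypothetical arrival times for 0.5 s chunks on a jittery connection.
arrivals = [0.1, 0.3, 1.4, 1.6]
delay = min_startup_delay(arrivals, 0.5)
print(f"start playback after {delay:.2f} s of buffering")
```

Here the third chunk arrives late, so playback must wait about 0.4 s before starting. A larger buffer trades extra latency for robustness against network jitter.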

Tingkap Nada, which literally means 'voice' or 'tone' from the window, is the first part of The Shadow Project, an interactive sound art research project that will be realised using the Locus Sonus (from Latin, 'location sound') streaming platform. The goals of this artistic research project are: 1) to explore internet audio streaming platforms for sound art creation and community interaction; and 2) to establish a sustainable global platform for disseminating natural culture, the Nada Bumi soundscape, and sound art to the community. Tingkap Nada will therefore consist of two matched devices, installed in two different natural landscapes exposed to unpredictable weather and other natural and human obstacles, and operating around the clock. The installations 'exchange' live audio streams of the soundscape between a natural, cultural, and heritage site in Malaysia and another part of the world (Figure 1.1). Listeners perceive the streams through the installations or through the Locus Sonus Soundmap website. Several sound artists and acoustic ecologists have conducted similar projects on the Locus Sonus platform, such as Grégoire Lauvin's split soundscape, Locus Stream Tuner, and Locus Stream Promenade, to mention a few. Even so, this research output is a unique opportunity during the Covid-19 pandemic, especially for the Malaysian community, to appreciate home-grown natural-culture soundscapes and sound art in 'engaging' ways, which will be explored further during the development of the sound installation.

Using and or participating in the stream project implies questioning ‘traditional’ listening and compositional practices where audio content is pre-determined. It raises questions regarding ‘real-time’ and ‘real-space’ as well as continuity and mobility that are reflected overall in the corpus of artistic creations. (Locustream, 2018)

As stated on its website, Locus Sonus is a France-based collective research group that has explored the ever-evolving relationship between sound, place, and usage since 2005. Locus Sonus is integrated into the École Supérieure d'Art d'Aix and Laboratoire PRISM, and is funded by the Ministère de la Culture Française. Locus Sonus has since established a global collaborative artistic research network involving sound artists, composers, ecologists, audio engineers, computer scientists, and others. This network is supported by open-source collective learning platforms such as the Acoustic Commons Creative Technical Workshop and by fundamental research outputs such as the Locus Sonus Soundmap from the Locustream project. The Locus Sonus Soundmap is an interactive, geotagged web map of live audio streams. The streams are recorded and encoded by the live audio streamer application Darkice and served by the streaming media server Icecast (Locus Sonus, About, 2020). Stéphane Cousot (Acoustic Commons Creative Technical Workshop #2, January 2021) summarised the mechanism of the Locus Sonus Soundmap as follows:

ok. It's very "simple" in fact, with a very efficient bin (Darkice @ http://darkice.org/), the audio data are encoded and sent through WiFI or 3G/4G connection to our server (Icecast - https://www.icecast.org/), then the sound map scripts play the selected source from that server. There is two separated side, the sources and the listeners, no radio. (Stéphane Cousot, 2021).

Darkice is an open-source live audio streamer application. It records audio input from a recording and playback hardware interface, such as a computer or smartphone, encodes it into a streaming format such as Ogg Vorbis or MP3, and sends it over the internet to a dedicated streaming server (Diniz, R. et al., 2016). The Locus Sonus Soundmap uses Icecast, an open-source streaming media server maintained by the Xiph.org Foundation. Icecast streams Ogg Vorbis or Theora, as well as MP3 and AAC via the SHOUTcast protocol (MDN Web Docs, 2021).
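To show what a Darkice source looks like in practice, the following Python sketch writes a minimal Darkice configuration file with the standard library's configparser. It is an illustration only. The Streambox and LocusCast handle this setup themselves, and the server name, port, password, mount point, and ALSA device below are hypothetical placeholders, not Locus Sonus's actual settings. The section and key names follow the darkice.cfg manual page; check them against the Darkice version you install.

```python
import configparser
import io


def darkice_config(server, port, password, mount, device="hw:0,0",
                   sample_rate=44100, channels=2, bitrate_kbps=128):
    """Return the text of a minimal darkice.cfg streaming to one Icecast 2 server."""
    cfg = configparser.ConfigParser()
    cfg.optionxform = str  # keep Darkice's camelCase key names as written
    cfg["general"] = {
        "duration": "0",       # 0 = keep streaming indefinitely
        "bufferSecs": "5",     # seconds of audio buffered inside Darkice
        "reconnect": "yes",    # reconnect if the server connection drops
    }
    cfg["input"] = {
        "device": device,      # ALSA capture device (hypothetical default)
        "sampleRate": str(sample_rate),
        "bitsPerSample": "16",
        "channel": str(channels),
    }
    cfg["icecast2-0"] = {
        "bitrateMode": "cbr",
        "format": "vorbis",    # Ogg Vorbis, one of the formats Icecast serves
        "bitrate": str(bitrate_kbps),
        "server": server,
        "port": str(port),
        "password": password,
        "mountPoint": mount,
    }
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()


# Placeholder values below are hypothetical, not a real Locus Sonus server.
print(darkice_config("icecast.example.org", 8000, "hackme", "/example.ogg"))
```

The printed text can be saved as darkice.cfg and passed to Darkice with `darkice -c darkice.cfg`.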

The Locus Sonus Open Microphone Project has developed three types of recording and playback interface that integrate Darkice for the Locus Sonus Soundmap: 1) a smartphone running the Locus Sonus mobile app, LocusCast; 2) a mobile or installed Raspberry Pi microcomputer running the Locus Sonus software known as the Locus Sonus Streambox; and 3) a computer running the open-source Pure Data software with the Locus Sonus Soundmap Pure Data patch. The audio encoded by Darkice is then sent to the Locus Sonus Soundmap Icecast server, from which it can be heard on three client platforms:

1) Locus Sonus Soundmap website https://locusonus.org/soundmap/051/ ;

2) Locus Sonus Soundmap audio stream weblink http://locusonus.org/soundmap/server/ ; and

3) Locus Sonus Pure Data Patch https://locusonus.org/locustream/#pd .

The Open Microphone hardware interfaces enable anyone to set up their own live audio streaming session, which can be administered through the Locus Sonus Soundmap Administration user account application:

https://locusonus.org/soundmap/dev/admin/login/,

which allows the user to control streaming parameters and details such as time zone, stream identification, and audio format, to name a few. The operating parameters of a user's Locus Sonus Streambox can be configured in two ways. Locally, the user logs in to the Streambox over the same Local Area Network (LAN), with internet sharing enabled over an Ethernet cable connected between the Streambox and the user's computer, using a web browser at https://streambox.local/ or the Secure Shell (SSH) command ssh @streambox.local. Remotely, the user connects over a Wide Area Network (WAN) through the Streambox's Wi-Fi connection at https://streambox.<streambox_IP_address>/ or with ssh @streambox.<streambox_IP_address> (Sinclair, P. 2018). SSH access across a different internet service provider (ISP) network, as needed for a remote off-grid setup, will be explored towards the end of this project's development.

The Locus Sonus Streambox uses the Raspberry Pi, a programmable single-board microcomputer that is widely used for its small size, low cost, and energy efficiency, while offering processing performance similar to a basic desktop computer. The Streambox combines this hardware with live audio streaming to the open-source Locus Sonus Soundmap server and with its collective learning community. The Locus Sonus Streambox hardware interface and the Locus Sonus Soundmap server are therefore the ideal starting point for developing this sound installation. However, the Tingkap Nada Streambox will need to be modified to be self-sustaining in an off-grid environment with unpredictable weather and other natural and human obstacles. Other off-grid, portable recorders designed for remote natural soundscape recording exist, such as those from Wildlife Acoustics (https://www.wildlifeacoustics.com/) and Open Acoustic Devices' AudioMoth (https://www.openacousticdevices.info/audiomoth), to list a few, but they lack live audio streaming capability. Comparing such devices with the Locus Sonus Streambox will be useful when planning the modifications that Tingkap Nada needs. The Locus Sonus Streambox blueprint and its required modifications are investigated further, along with other related interfaces, in Part 2 below.

Figure 1.1. Tingkap Nada basic network mapping draft

Part 2: Roasting and Grounding

Keywords: Streambox, Digital Signal Processing, transducer, efficiency, durability, mobility, maintenance

In line with the Tingkap Nada project goals, the design and build of Tingkap Nada are based on five operational requirements: 1) high-quality audio recording, playback, and streaming; 2) self-powered operation around the clock; 3) portable size; 4) durability; and 5) cost-effectiveness. The exterior of Tingkap Nada will replicate a window whose architectural design and aesthetic 'visually' represent the historical culture of the soundscape site. This visual concept will be discussed in detail towards the end of this study.

The Locus Sonus Streambox is an open-source, self-assembled hardware interface. It consists of a Raspberry Pi microcomputer running the Locus Sonus streaming operating system (OS), which is installed on a micro Secure Digital (microSD) card and can be downloaded free of charge from https://locusonus.org/streambox/. The Raspberry Pi serves as the main audio streaming processor, and the operating system scripts can be modified further for additional computing tasks. Locus Sonus states that any Raspberry Pi Model A, Model B, or Zero that is compatible with the Streambox operating system architecture can be used. The Raspberry Pi family includes several models with different features, as compared by Pi Hut in the following table:

https://thepihut.com/blogs/raspberry-pi-roundup/raspberry-pi-comparison-table.

The main features to consider when selecting a Raspberry Pi for an off-grid Locus Sonus Streambox are:
1) processing capacity, including the number of Central Processing Unit (CPU) cores, CPU clock speed, and Random Access Memory (RAM) size, to run the Locus Sonus audio streaming operating system;
2) the number of powered Universal Serial Bus (USB) ports available for additional interfaces that improve streaming quality;
3) the type of internet connection available to broadcast the live stream;
4) total power consumption, to estimate the power required and plan powering options for off-grid operation; and
5) a reasonable cost for the microcomputer and everything else needed to get this project off the ground.

Table 2.1 compares the current Raspberry Pi Model A, Model B, and Zero boards. The Raspberry Pi 3 Model A+ and Pi 3 Model B+ have similar processing capacity: four CPU cores clocked at 1.4 GHz, with 512 MB and 1 GB of RAM respectively. The Raspberry Pi Zero Wireless, by contrast, has a single CPU core clocked at 1 GHz (0.4 GHz lower) and the same 512 MB of RAM as the Pi 3 Model A+. However, the extra CPU cores, higher clock speed, and larger RAM come at the cost of higher power consumption.
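The third criterion, the internet connection, matters off-grid because a mobile data plan must carry the stream around the clock. The following minimal Python sketch compares uncompressed PCM audio with a compressed stream. The 128 kbps figure is an example bitrate chosen for illustration, not a Locus Sonus default, and gigabytes are decimal (10^9 bytes).

```python
def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Bitrate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


def data_per_day_gb(bitrate_kbps, hours=24.0):
    """Data sent by a constant-bitrate stream, in decimal gigabytes."""
    bits = bitrate_kbps * 1000 * hours * 3600
    return bits / 8 / 1e9


raw = pcm_bitrate_kbps(44100, 16, 2)  # CD-quality stereo, uncompressed
print(f"raw PCM: {raw:.1f} kbps, {data_per_day_gb(raw):.2f} GB/day")
# 128 kbps is an example compressed bitrate, not a measured setting.
print(f"128 kbps stream: {data_per_day_gb(128):.2f} GB/day")
```

On these numbers, a 128 kbps stream sends roughly 1.4 GB per day, or about 41 GB over a 30-day month. This is why compressed formats such as Ogg Vorbis are used for continuous streaming.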

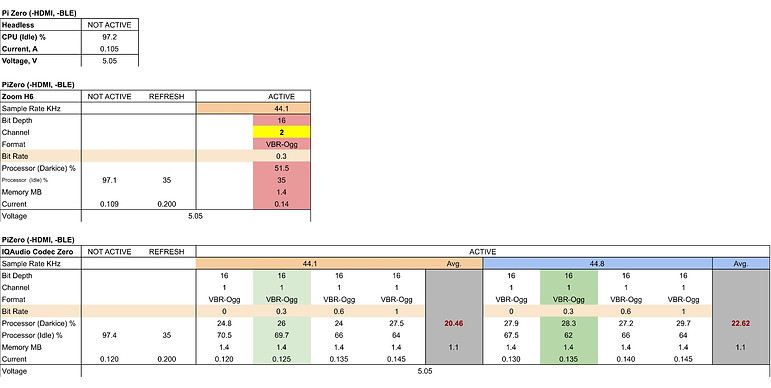

Table 2.1. Latest Raspberry Pi model version specification comparison

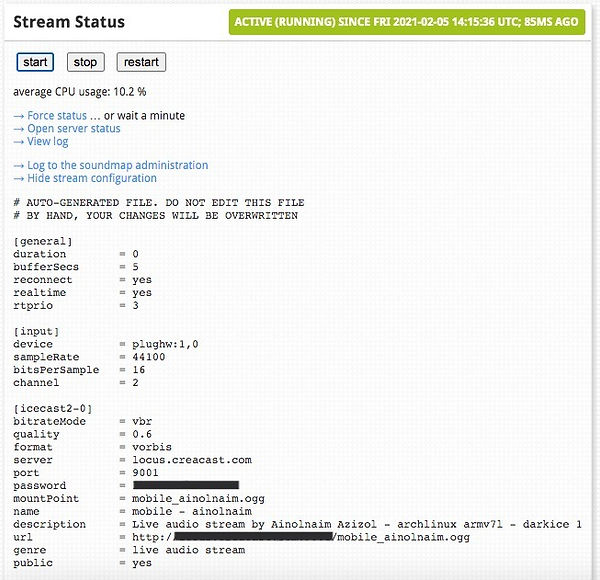

An off-grid Locus Sonus Streambox must be self-sustaining around the clock, lightweight, and small. It therefore needs to run at low power from a rechargeable supply fed by free renewable energy, without limiting the quality of the audio stream. The CPU usage and power consumption of the Raspberry Pi 3B+ and Zero are measured to identify the model that offers sufficient processing for high-quality audio streaming at the lowest power consumption. Both Raspberry Pi boards are set up with identical high-quality streaming settings and the same battery-powered, studio-quality sound card, a Zoom H6. CPU usage is then monitored during active live streaming by two methods, for cross-reference: 1) over SSH, using the nmon Linux monitoring tool or simply the top command; and 2) through the Locus Sonus Streambox User's Administration web application, over Ethernet or wireless (Figures 2.1 and 2.2).
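Linux tools such as top read their CPU figures from the kernel's counters in /proc/stat. As a cross-check that can also be logged unattended, the following Python sketch computes overall CPU usage from two snapshots of the aggregate cpu line in that file. It is an illustration, not part of the Locus Sonus software, and reading /proc/stat works only on Linux, such as Raspberry Pi OS.

```python
def parse_cpu_line(line):
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    ticks = [int(v) for v in fields[1:]]
    # Columns: user nice system idle iowait irq softirq steal guest guest_nice.
    # guest and guest_nice are already counted inside user and nice, so only
    # the first eight columns are summed to avoid double counting.
    total = sum(ticks[:8])
    idle = ticks[3] + (ticks[4] if len(ticks) > 4 else 0)  # idle + iowait
    return idle, total


def cpu_usage_percent(before, after):
    """CPU usage (%) between two snapshots of the aggregate 'cpu' line."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 100.0 * (1.0 - (idle1 - idle0) / elapsed)


def read_cpu_line(path="/proc/stat"):
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line (Linux only)."""
    with open(path) as f:
        return f.readline()
```

On the Streambox, take `a = read_cpu_line()`, wait with `time.sleep(1)`, take `b = read_cpu_line()`, and then call `cpu_usage_percent(a, b)`. The result should roughly match the total CPU load that top reports over the same interval.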

The power consumption of both Raspberry Pi boards is measured without bypassing the input filter capacitors, TVS surge-suppression diodes, and polyfuse on the board, using one of two methods. In the first method, the board is supplied by a constant 5 V, 2.5 A Power Supply Unit (PSU) through the GPIO pins (see the GPIO chart at https://www.raspberrypi.org/documentation/usage/gpio/), and a multimeter is connected to the GPIO 5 V and Ground power input pins for voltage and current readings. In the second method, the board is supplied by a PSU through its USB power input. For voltage readings, a multimeter is connected in parallel to the standard test points on the underside of the board, PP1 or PP2 for 5 V and PP5 for Ground, which are usually located near the USB power input port. For current readings, the multimeter is connected in series between the power source and the board (Figure 2.3) (Patrick, D. R., & Fardo, S. W. 2008).

Figure 2.1. Locus Sonus Streambox User's Administration Website

Figure 2.2. Locus Sonus Streambox User's Administration SSH command line in Terminal

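Based on Ohm's law and the electrical power relation:

$$V = IR, \qquad P = VI$$

For a series circuit:

$$V_{total} = \sum_{k} V_k, \qquad R_{total} = \sum_{k} R_k, \qquad I_{total} = I_a = I_b = I_c$$

where power (P) is in watts (W), voltage (V) in volts (V), current (I) in amperes (A), and load resistance (R) in ohms (Ω).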
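Applied to the multimeter readings described above, P = VI gives the board's power draw, and multiplying by time gives the energy the off-grid supply must provide. The following Python sketch computes power for each paired reading before averaging. The voltage and current values are hypothetical, not the measurements from this study.

```python
def mean_power_w(readings):
    """Average power (W) from paired (volts, amps) readings, using P = V * I per reading."""
    if not readings:
        raise ValueError("need at least one reading")
    return sum(v * i for v, i in readings) / len(readings)


def energy_wh(power_w, hours):
    """Energy (Wh) drawn by a constant load over a period."""
    return power_w * hours


# Hypothetical multimeter readings taken during a live stream (volts, amps).
readings = [(5.10, 0.12), (5.08, 0.14), (5.09, 0.13)]
p = mean_power_w(readings)
print(f"average power {p:.3f} W, {energy_wh(p, 24):.1f} Wh per day")
```

Power is computed per reading rather than from the average voltage and average current, because P = VI applies to each simultaneous pair of readings.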

Figure 2.4 Power consumption and CPU usage monitoring on the Pi Zero Wireless (Bluetooth (BLE) and HDMI disabled) with the Zoom H6 (Zoom stereo XY microphone) and IQAudio Codec Zero (on-board mono MEMS microphone) sound cards.

A studio condenser (capacitor) microphone requires an additional voltage for two purposes. First, it polarises the microphone transducer (capacitor charge and discharge). Second, it powers a preamplifier that raises the 'small' voltage signal, produced by capacitance variations between a vibrating membrane (positively charged) and a backplate (negatively charged), to line level (Rumsey, F., & McCormick, T. 2014). This additional voltage is known as phantom power, at 9 to 48 volts (V) and 50 to 10 milliamperes (mA) or less. An external sound card is usually USB powered at a typical 5 V and 500 mA, and converts this input voltage into the phantom power voltage. In an ideal converter, input power equals output power (P_in = P_out) (Patrick, D. R., & Fardo, S. W. 2008). A condenser microphone on a USB-powered sound card therefore consumes considerably more energy per hour (watt-hours, Wh) than an electret condenser microphone. An electret has a permanently charged (electrostatic) diaphragm and a built-in FET preamplifier that needs only 1.5 to 2.5 V at 10 mA or less. A studio-quality electret condenser microphone with a wide dynamic range, low self-noise, full sound spectrum, and flat frequency response will therefore be used for the off-grid audio streaming installation unit(s): the Primo EM272 or the PUI Audio AOM-5024L-HD-R. The two microphones share the same specifications on paper, except for frequency response. Even so, the Primo EM272 has been observed to produce a more transparent and 'livelier' tone colour than the AOM-5024L-HD-R, which has slightly more bass (Wollman, D. 2020). However, the Primo EM272 is much more costly, and in theory the output of the AOM-5024L-HD-R can be EQ-ed via the Raspberry Pi's Alsamixer to achieve a tone similar to the Primo EM272.
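The power-balance argument (P_in = P_out) can be made concrete with a short calculation. Assuming a converter efficiency η, the current drawn from a 5 V USB supply is I_in = V_out·I_out / (η·V_in). The following Python sketch compares a condenser microphone at 48 V and 10 mA, the top of the phantom power range above, with an electret FET at 2 V and 0.5 mA, an illustrative value within the 1.5 to 2.5 V range. The 80% efficiency is an assumption; real sound cards differ.

```python
def supply_current_a(v_out, i_out, v_in=5.0, efficiency=1.0):
    """Current drawn from the supply to deliver v_out * i_out to the load.

    Power balance for a converter: v_in * i_in * efficiency = v_out * i_out.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return v_out * i_out / (efficiency * v_in)


# Illustrative loads; the 0.8 efficiency is an assumption, not a measured figure.
phantom = supply_current_a(48.0, 0.010, efficiency=0.8)   # condenser on 48 V phantom
electret = supply_current_a(2.0, 0.0005, efficiency=0.8)  # electret FET bias
print(f"phantom: {phantom * 1000:.0f} mA, electret: {electret * 1000:.2f} mA from 5 V")
```

Under these assumptions, the condenser microphone's phantom supply alone draws roughly 500 times more current from the USB line than the electret bias. This calculation counts only the microphone load; the sound card's own electronics draw additional current.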

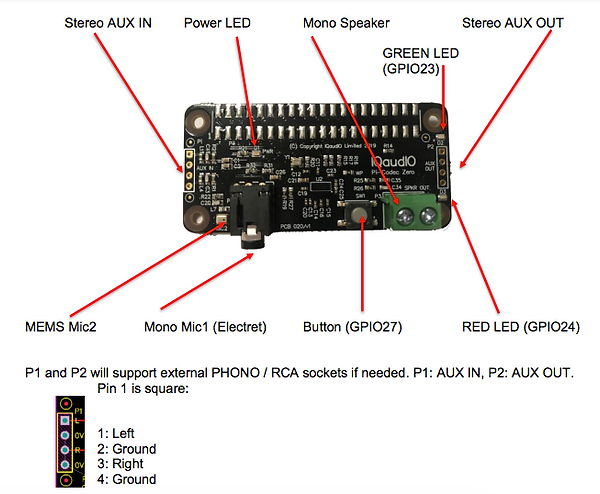

A Raspberry Pi Hardware Attached on Top (HAT) sound card, the IQaudio Codec Zero, is used with both the Pi Zero Wireless and the Pi 3 B+. It preamplifies the external stereo electret condenser microphones and converts the analogue signals (voltage variations) into digital signals (binary data). The IQaudio Codec Zero can sample and encode the microphone signals at 44.1 to 96 kHz and 16 to 24-bit depth. It is a very flexible, small HAT (the same size as the Pi Zero) available on the current market (2021), with the following inputs and outputs:
- a stereo auxiliary input (for a stereo microphone);
- a stereo auxiliary output (for stereo speakers);
- a built-in mono omnidirectional MEMS microphone, switchable with a built-in 3.5 mm microphone input jack; and
- two stereo speaker outputs.

These match the basic input-output requirements of the off-grid streaming (recording and playback) installation unit(s), Tingkap Nada: stereo speaker playback and stereo microphone recording (IQaudio Limited. 2020). The IQaudio Codec Zero is installed according to the IQaudio Product Guide (Version 32). Its audio parameters are controlled with the Raspberry Pi's built-in audio mixer program, Alsamixer, via SSH over Wi-Fi (Figure 2.4), and pilot streaming is done with the HAT's on-board MEMS microphone (Audio 2.1).

Figure 2.4. IQAudio Codec Zero sound card control parameters with default Raspberry Pi alsamixer program.

Audio 2.1. Pilot live audio streaming via IQAudio Codec Zero sound card and Pi Zero W.

The IQAudio Codec Zero sound card must be configured to enable sound recording and playback. This is done by activating several of its input and output channels in the Raspberry Pi's Alsamixer (Figure 2.4). To do this, run the command alsamixer in the SSH terminal window and select the designated sound card (IQAudio Codec) in the Alsamixer window. Then activate each of the following channel strips by changing [MM] (muted) to [00] or to a gain value: [Mic 1], [Mic 2], [Aux], [Mixin >all<], [Mixin PG], [ADC], and [ADC GAIN]. The [ADC] gain is set to 90% and the remaining gains to 70%. These gain levels can be adjusted further according to the recording quality required, and the settings can be stored with the command sudo alsactl store.
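The same settings can be scripted instead of set by hand, which helps when several units must be configured identically. The following Python sketch builds amixer commands (amixer is the command-line counterpart of Alsamixer in alsa-utils). The card index and control names are assumptions. The names are copied from the Alsamixer strips listed above and may be truncated there, so list the real names with `amixer -c <card> scontrols` before running. Drop the unmute keyword for any control that has no mute switch.

```python
import shlex
import subprocess


def amixer_commands(card, levels):
    """Build 'amixer sset' commands that set each control's level and unmute it.

    card   -- ALSA card index or name (hypothetical; check /proc/asound/cards)
    levels -- mapping of simple control name -> level in percent (0-100)
    """
    commands = []
    for control, percent in levels.items():
        if not 0 <= percent <= 100:
            raise ValueError(f"{control}: level must be 0-100, got {percent}")
        commands.append(
            ["amixer", "-c", str(card), "sset", control, f"{percent}%", "unmute"]
        )
    return commands


def apply(commands, dry_run=True):
    """Print the commands (dry run) or execute them, stopping on the first error."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)


# Levels from the text: ADC at 90%, other strips at 70%. Control names are hypothetical.
levels = {"ADC": 90, "Mic 1": 70, "Mic 2": 70, "Aux": 70}
apply(amixer_commands(0, levels))
```

After a real run with `dry_run=False`, `sudo alsactl store` saves the mixer state, as described above.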

It was also observed that the 2.7 V plug-in power (bias) from the IQAudio Codec Zero Mic 1 input can power the Primo EM272's FET at 0.5 mA. To enable this, set Mic 1 to [P-Mic] and Mic 2 to [N-Mic] in the Codec Zero's Alsamixer. Because this input provides only a single bias line referenced to ground (tip-sleeve), only one microphone can be used, in mono. A binaural or stereo microphone array therefore requires two signal lines referenced to ground (tip-ring-sleeve). In this system, that means using the IQAudio Codec Zero's Aux stereo input, which will be discussed further in the Sfera Nada project.

A commercial lithium-ion power bank with a 5 V, 2 A output and built-in charge-discharge protection and noise filter circuits is used as the main power source for Tingkap Nada. Compared with a DIY power bank built from a Sealed Lead Acid (SLA), lithium-polymer, or nickel-metal hydride battery, it is low-cost, easy to obtain and maintain, portable, far safer, and more stable in performance. A solar panel measuring 170 mm wide by 230 mm high, rated at 18 V and 15 W, charges a 10 Ah power bank through a USB fast-charging module with a 5 V, 3 A output. In real-life testing, the panel produced 18 V at 10 W (direct output) in direct sunlight on a bright summer day. It took 4 hours to charge the commercial 10 Ah power bank from 70% to 100% at a 5 V, 3 A charging input (note that a fast-charging USB cable rated at 3 A is needed). The solar panel is fitted into a 3-D printed X frame made from PETG filament for weather resistance and durability. The panel set can be mounted on an M10 or M6 screw tripod stand or microphone stand, or hooked, strapped, or suspended from a tree branch or wall by the X frame (Figure 2.5).
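The charging test can be turned into a simple energy budget. Power banks are usually rated in milliamp-hours at the lithium cell's nominal voltage, typically about 3.7 V, so a 10 Ah bank stores roughly 37 Wh. The following Python sketch assumes that 3.7 V figure and a hypothetical 85% output conversion efficiency. The 1 W load is a placeholder, not a measured Streambox draw.

```python
def bank_energy_wh(capacity_mah, cell_voltage=3.7):
    """Nominal stored energy (Wh) of a bank rated in mAh at the cell voltage (assumed 3.7 V)."""
    return capacity_mah / 1000 * cell_voltage


def runtime_hours(capacity_mah, load_w, efficiency=0.85, cell_voltage=3.7):
    """Hours a full bank can run a constant load; the 0.85 efficiency is an assumption."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    return bank_energy_wh(capacity_mah, cell_voltage) * efficiency / load_w


def average_charge_power_w(capacity_mah, start_pct, end_pct, hours, cell_voltage=3.7):
    """Average power stored in the bank's cells during a charging test."""
    stored = bank_energy_wh(capacity_mah, cell_voltage) * (end_pct - start_pct) / 100
    return stored / hours


# Charging test from the text: 10,000 mAh bank, 70% -> 100% in 4 hours.
print(f"stored during test: {average_charge_power_w(10_000, 70, 100, 4):.2f} W on average")
# Hypothetical 1 W load on a full bank.
print(f"runtime at 1 W: {runtime_hours(10_000, 1.0):.1f} h")
```

On these assumptions, only about 2.8 W of the panel's 10 W was stored in the cells on average during the test. This is partly because lithium charging slows as the cells approach full. It is a useful margin to keep in mind when sizing the panel for cloudy days.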

Figure 2.5 Solar panel set testing session

To be updated: Speaker transducer and output amplification system.

To be updated: SSH over different ISP (i.e. mobile broadband and fixed-line broadband).

Part 3: Brewing

To be updated: Tingkap Nada Design and artistic concept.

Figure 3.1 Traditional Malay (upper) and English (bottom) house window design

References:

Acoustic Commons Creative Technical Workshop #2. (2021, January 29). http://acousticommons.net/events/ctw.html

Darkice. (2021, February 09). Darkice. Home. http://darkice.org/

Freire, A. M. (2008). Remediating radio: Audio streaming, music recommendation and the discourse of radioness. Radio Journal: International Studies in Broadcast & Audio Media, 5(2-3), 97-112.

Gelman, A. D., Halfin, S., & Willinger, W. (1991, December). On buffer requirements for store-and-forward video on demand service circuits. In IEEE Global Telecommunications Conference GLOBECOM'91: Countdown to the New Millennium. Conference Record (pp. 976-980). IEEE.

Grant, K. (2020, May 16). The Future Of Music Streaming: How COVID-19 Has Amplified Emerging Forms Of Music Consumption. Forbes. https://www.forbes.com/sites/kristinwestcottgrant/2020/05/16/the-future-of-music-streaming-how-covid-19-has-amplified-emerging-forms-of-music-consumption/?sh=1103e7a1444a

Icecast. (2021, February 09). Icecast. Home. https://www.icecast.org/

IQaudio Limited. (2020). IQaudio Product Guide (version 32). Retrieved from https://datasheets.raspberrypi.org/iqaudio/iqaudio-product-brief.pdf

Lee, J. (2005). Scalable continuous media streaming systems: Architecture, design, analysis and implementation. John Wiley & Sons.

Locus Sonus. (2021, January 30). About. https://locusonus.org/wiki/index.php?page=About.en

Locus Sonus. (2021, January 30). Locustream. https://locusonus.org/wiki/index.php?page=Locustream.en

Locus Sonus. (2021, January 30). Hardware. Streambox. http://locusonus.org/streambox/

Matuschek, D. (2014, July 20). Sound Quality of the Raspberry Pi B. http://www.crazy-audio.com/2014/07/sound-quality-of-the-raspberry-pi-b/

MDN Web Docs. (2021, February 03). Web Technology for Developer. Live Streaming Web Audio and Video.

MDN Web Docs. (2021, February 03). Web. Web Audio API. https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API

Patrick, D. R., & Fardo, S. W. (2008). Electricity and electronics fundamentals (2nd ed.). Fairmont Press. Retrieved from https://ebookcentral.proquest.com/lib/bristol/detail.action?docID=3239060

Pi Hut. (2017, February 21). Raspberry Pi Round Up. Raspberry Pi Comparison Table. https://thepihut.com/blogs/raspberry-pi-roundup/raspberry-pi-comparison-table

Reason, S. (2020, November 06). Music Streaming Actually Existed Back In 1890. https://blitzlift.com/music-streaming-actually-existed-back-in-1890/

Sinclair, P. (2018, August). Locus Stream Open Microphone Project. In 2018 ICMC Preserve | Engage | Advance.

Upton, E., Duntemann, J., Roberts, R., Mamtora, T., & Everard, B. (2016). Learning computer architecture with Raspberry Pi. John Wiley & Sons.

Ylonen, T. (1996, July). SSH–secure login connections over the Internet. In Proceedings of the 6th USENIX Security Symposium (Vol. 37).

SSH. (2021, February 03). SSH Protocol. https://www.ssh.com/ssh/protocol/

Webopedia. (2021, February 03). Reference. Server. https://www.webopedia.com/reference/servers/

Wollman, D. (2020, October 08). Primo EM 272 & EM 264Y Lavalier Microphones | Began with AOM-5024L-HD-R | DIY. Retrieved from https://www.youtube.com/watch?v=ZAzL0G7iFGY


Sfera Nada: Off-grid Live Audio Streamer Device

Keywords: acoustic ecology, soundscape, off-grid, Locus Sonus, location sound, sound field

The objective of this artistic research project is to develop Sfera Nada, an off-grid, 24-hour live location sound streaming device derived from the Tingkap Nada installation project. This project supports the aim of the artistic research theme, Nada Bumi, which is to re-imagine Malay cultural identity by accentuating biophony and geophony materials through sound art practice, for future reference and development. Sfera Nada is a self-sustained off-grid unit: a durable, waterproof device and 'versatile' location audio recording system. It is equipped with a binaural electret microphone array and multiple audio inputs for external sound transducers such as an omnidirectional hydrophone or a geophone. With add-on hardware, Sfera Nada can be deployed by hanging, floating, or attaching it to various surfaces. The device is fabricated by 3-D printing, a rapid prototyping technology that allows easy assembly, maintenance, customisation, and part replacement.

Figure 1.0 Sfera Nada design concept via Computer-Aided Design (CAD)

Figure 2.0 Sfera Nada Docking System: Pi Zero (W) and IQAudio Codec Zero sound card HAT inputs and outputs for the binaural array and omnidirectional hydrophone.

Figure 2.1 IQAudio Codec Zero input-output options and wiring diagram for aux stereo inputs at 1 Vrms (IQAudio Product Guide PDF)

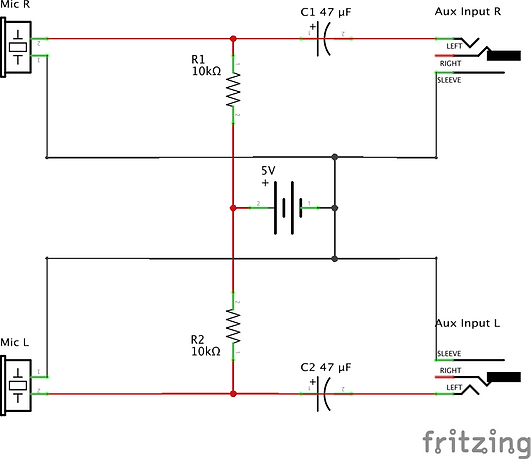

A matched pair of Primo EM272 electret microphones is used for Sfera Nada's stereo or binaural sound field array (Figures 3.1 & 3.2). The microphones require a bias voltage of 5 V at 0.5 mA (0.0005 A), in line with the manufacturer's recommended specification. Following Ohm's law, V = IR, each microphone is connected through its bias voltage circuit with a 10 kΩ metal film load resistor (R), which gives a 0.5 mA current (I) from the 5 V source (V).

Figure 3.0 Manufacturer's specification for Primo EM272 Electret Condenser Microphone

Figure 3.1 Matched pair Primo EM272 Electret Condenser Microphone prepared for Binaural/ Stereo rig.

In addition, a 47 μF electrolytic capacitor is connected from the junction of the microphone V+ and the resistor to the Aux V+ input. It blocks Direct Current (DC) from passing into the input, since only the Alternating Current (AC) component is wanted as the audio signal (Figures 3.1 & 3.2). DC has a frequency of 0 Hertz (Hz), so frequencies near 0 Hz (roughly 0 to 3 Hz) need to be filtered out to leave only the AC output. The capacitive reactance is

$$X_C = \frac{1}{2\pi f C}$$

where $X_C$ is the capacitive reactance (load resistance) in ohms, $f$ is the frequency in hertz, and $C$ is the capacitance in farads. Setting $X_C$ equal to the load resistance $R$ and solving for $f$ gives the high-pass cut-off frequency

$$f_c = \frac{1}{2\pi R C} = \frac{1}{2\pi \times 10\,000\,\Omega \times 47 \times 10^{-6}\,\text{F}} \approx 0.339\ \text{Hz}$$

The 47 μF DC-blocking capacitor and the 10 kΩ load resistor therefore form a high-pass filter with a cut-off just above 0 Hz.
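Both calculations in this section can be checked numerically. The following Python sketch uses the component values given above (10 kΩ, 47 μF, a 5 V supply) and the 2.87 V supply used in the Figure 3.2 test. It reproduces the 0.5 mA bias current and the cut-off of about 0.339 Hz, treating the circuit as the simple resistor-capacitor model described in the text.

```python
import math


def bias_current_a(supply_v, load_ohm):
    """Ohm's law, I = V / R (simple model that ignores the FET's own voltage drop)."""
    return supply_v / load_ohm


def rc_cutoff_hz(r_ohm, c_farad):
    """First-order RC cut-off frequency, f_c = 1 / (2 * pi * R * C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)


R = 10_000  # 10 kOhm metal film load resistor
C = 47e-6   # 47 uF electrolytic DC-blocking capacitor
for supply in (5.0, 2.87):
    print(f"{supply} V supply -> {bias_current_a(supply, R) * 1000:.3f} mA")
print(f"high-pass cut-off: {rc_cutoff_hz(R, C):.3f} Hz")
```

With the 2.87 V test supply, the same resistor gives a lower bias current than the 0.5 mA recommended for a 5 V supply.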

Figure 3.2 Testing out the two sets of bias voltage with DC block circuit for each matched-pair Primo EM272 Electret Condenser Microphone Stereo/Binaural sound field array rig (using only 2.87V supply).

Figure 3.3 Circuit diagram for the bias voltage and DC-block circuit in the Figure 3.2 setup; the left microphone diagram is the mirror image of the right microphone diagram.

Before the microphones were attached to the audio streamer module (Pi Zero and IQaudio Codec Zero), a noticeable 3 dB tape-like hiss was present when an external power bank was used. An RC filter was therefore added at the power bank output to counter this issue (Figure 3.3). During the live audio streaming test session, static Radio Frequency (RF) or Electromagnetic (EM) interference (Morse code-like beeping) was observed, so the system needs better shielding and grounding (Audio 3.0).
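An RC filter on a supply line is a first-order low-pass filter that uses the same cut-off formula, f_c = 1/(2πRC). It also involves a trade-off worth checking before choosing parts: the series resistor drops V = IR at the load current. This makes it better suited to low-current lines, such as a microphone bias supply, than to the line powering the Pi itself. The text does not give the filter's component values, so the 100 Ω and 470 μF below are hypothetical, as is the 1 mA load. The sketch also assumes a negligible source impedance.

```python
import math


def rc_lowpass(r_ohm, c_farad, load_a, freq_hz):
    """Cut-off (Hz), DC voltage drop (V) and attenuation (dB) of a series-R, shunt-C supply filter."""
    cutoff = 1.0 / (2.0 * math.pi * r_ohm * c_farad)
    drop = load_a * r_ohm                                  # Ohm's law across the series resistor
    gain = 1.0 / math.sqrt(1.0 + (freq_hz / cutoff) ** 2)  # first-order low-pass magnitude
    return cutoff, drop, 20.0 * math.log10(gain)


# Hypothetical parts: 100 ohm, 470 uF, 1 mA load, evaluated at 1 kHz.
cutoff, drop, atten = rc_lowpass(100, 470e-6, 0.001, 1000)
print(f"cut-off {cutoff:.2f} Hz, drop {drop:.2f} V, {atten:.1f} dB at 1 kHz")
```

At a 1 mA load, the 100 Ω resistor costs only 0.1 V. At the few hundred milliamps a Raspberry Pi draws, however, the same resistor would drop tens of volts, which is not feasible on a 5 V supply.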

Audio 3.0 Stereo sound field excerpt of live audio streaming test session with EMI beeping and hissing background noise.

Figure 3.4 Microphone bias voltage and DC-block circuits soldered on a prototype circuit board. Ferrite beads are used to filter out clicking noise from the DC power line (including the battery power source). The spherical binaural shell of Sfera Nada will be lined with copper or aluminium foil to block RF interference.


Figure 4.0 Sfera Nada 3-D printed top shell post-processing for waterproof treatment.

To be updated: waterproofing approach.

Figure 5.0 Sfera Nada 3-D docking slot for the bottom shell.

To be updated: module docking system, solar power system, omnidirectional hydrophone, and overall cost breakdown and budgeting details (update: 120 GBP, including tax and postal/shipping charges from China).


Gerak Nada: Interactive 3-D Motion Sound Event

Keywords: Audio Spatial, Algorithm, Digital Signal Processing, Sensor, Movement, Mak Yong

The objective of this artistic research project is to translate the movements of Mak Yong, a traditional Malay dance-theatre, into sonic gestures and spatialisation through motion capture (mocap) technology. This will be applied in the electroacoustic composing process and stage performance of the Memoir of Malay Rebab and The Seed of Life work series. The project's 'unique' output will support the aim of the artistic research theme, Nada Bumi, which is to re-imagine Malay cultural identity by accentuating biophony and geophony materials through sound art practice. Various motion-sensing technologies, such as optical, acoustic, and non-visible electromagnetic sensors, will be studied to fill the gaps in the Nada Bumi artistic outputs. They will be combined with microcontrollers and software interfaces such as the open-source visual and audio programming environments Pure Data and Processing, the commercial Ableton Live and Max/MSP, and other third-party plug-ins, to list a few. The technological solution developed in this project may be explored further for other artistic outputs such as sound installations, arts therapies or rehabilitation, arts learning aids, and more.

Part 1: Motion Capture ~ Mocap

Motion capture, also known as mocap or mo-cap, is the process of recording the movement and gestures of objects, particularly the human body, as two-dimensional (2-D, x-y) or three-dimensional (3-D, x-y-z) displacement within a space. The technology retrieves continuous motion data from motion sensors for archiving, analysis, system feedback, and synthesis such as animation reconstruction. Its applications include filmmaking, video game development, medicine, and robotics, to list a few (Rahul, M. 2018). Mocap is used extensively in the film and video game industries. The technology is also available to consumers at an affordable price as interactive, real-time motion-sensing input devices such as Microsoft's Kinect for Xbox, the LiDAR scanner on the iPhone 12 Pro, and the Oculus Rift VR motion sensors, to list a few. Current mocap technology uses two clusters of motion-sensing technology, optical and non-optical motion capture (Table 1), in wearable and non-wearable forms (Menolotto, M. et al. 2020). The sensors detect events or environmental changes in the mechanical wave spectrum and the electromagnetic wave spectrum within their coverage and sensitivity.

For example, the Xbox Kinect, a non-wearable mocap device, uses a near-field infrared (IR) projector and two optoelectronic sensor detectors, an RGB sensor and an infrared sensor, which detect changes caused by human motion in two bands of the electromagnetic (EM) spectrum: visible (white) light and infrared light. The sensors convert these wavefield changes into varying electrical signals, which are then digitised and processed by algorithms such as 'gray-scale depth map' generation and 'spherical trigonometry' to produce 3-D object displacement data. Depth sensing works somewhat like radar, using a principle known as 'time-of-flight' (ToF; used in the second-generation Kinect, whereas the first generation used structured light): the IR projector emits infrared light towards a surface, and the scattered reflection is detected pixel by pixel by the infrared sensor within its field of view. The time taken for the light to travel from the projector to the object surface and reflect back to the sensor determines the object's depth; because the light covers the distance twice, the depth is half the round-trip time multiplied by the speed of light. The depth data (Z-coordinate) is then combined with the height (Y-coordinate) and width (X-coordinate) data derived from visible-light changes received by the RGB sensor, and processed through a complex 3-D model algorithm that recognises the human shape and generates its 3-D surfaces (Sarbolandi, H. et al. 2015).

For this research project, however, selected X-Y-Z displacement coordinates of human body movement will be emphasised and synthesised into sonic morphing and sonic spatio-temporal events, rather than translating full-body motion into 3-D visual animation. Motion capture techniques and systems from both clusters of motion sensor technology will be discussed further to determine a suitable mocap system for this project under four constraints: 1) efficiency; 2) accuracy; 3) accessibility; and 4) cost.
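As an illustration of how selected X-Y-Z displacement coordinates might be turned into spatialisation parameters, the sketch below converts a Cartesian position into azimuth, elevation, and distance, the three values most panning and spatialisation tools expect. The axis convention (origin at the centre of the listening space, X to the right, Y up, Z straight ahead) and the `toSpherical` helper are assumptions for this example, not part of the project's mocap system.

```cpp
#include <cmath>

// Positions in metres: X = width (right), Y = height (up), Z = depth (ahead).
struct Point3 { double x, y, z; };
struct Spherical { double azimuthDeg, elevationDeg, distance; };

constexpr double kPi = 3.14159265358979323846;
constexpr double kRadToDeg = 180.0 / kPi;

// Convert a Cartesian displacement into azimuth / elevation / distance.
// Assumed convention: origin at the centre of the listening space,
// 0 deg azimuth straight ahead (+Z), positive azimuth to the right (+X),
// positive elevation above ear level (+Y).
Spherical toSpherical(const Point3& p) {
    const double horizontal = std::hypot(p.x, p.z);  // length in the X-Z plane
    Spherical s;
    s.azimuthDeg = std::atan2(p.x, p.z) * kRadToDeg;
    s.elevationDeg = std::atan2(p.y, horizontal) * kRadToDeg;
    s.distance = std::hypot(horizontal, p.y);
    return s;
}
```

The resulting angles could then be scaled and routed to the panning parameters of a Pd spatialisation patch, while distance could drive level or reverb amount.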

Part 2: Software Interface

Two computer-based electronic devices, such as a computer running user applications (apps) and a microcontroller unit (MCU), can interface and communicate through serial or parallel communication over USB, Ethernet, Wi-Fi, Bluetooth, or radio, among others. This requires programming both the microcontroller and the computer to use an identical communication protocol (on a serial link, for example, the same baud rate and data framing). For this artistic research project, an MCU is needed to send a series of data variables to computer software, Pure Data (Pd) or Max/MSP, for further processing; the processed values will then manipulate the audio processing parameters applied in Nada Bumi sound arts.

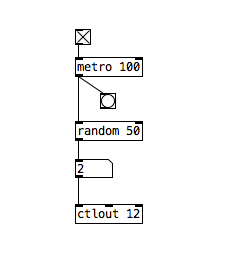

In Figure 2.1, a pilot serial communication from an Arduino MCU to Pure Data over USB and Bluetooth is explored using the [comport] Pd object, which sends and receives binary data as a sequence of 8-bit packets, or bytes (FLOSS Manuals; Peach, M. 2015). The [comport] object outputs a stream of decimal values between 0 and 255, containing both 'constant' and 'variable' values, which need further processing in Pd. First, the [select] object filters the stream so that only the useful variable values pass through (Figure 2.2). Next, the [repack] object, or an alternative such as the [zl group] object, regroups the selected values into message lists. Finally, these message lists are translated into meaningful, human-readable alphanumeric characters (char), based on the American Standard Code for Information Interchange (ASCII), by 'parsing' in Pd from list to symbol and integer (Konopka, C. 2018; Musil, T. 2005, among others).

In Pure Data version 0.51-3, published on 20 November 2020, external packages can be downloaded with the Deken library manager (Help menu > Find externals). Some downloaded external objects can then only be used after their library has been declared with a [declare] object in each Pd patch window, for example [declare -lib iemlib].
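The filter, regroup, and ASCII-parsing chain described above can be mirrored in plain C++ to clarify what each step does to the byte stream. This sketch assumes the Arduino sends each reading as ASCII digits followed by CR LF (13 10), as `Serial.println()` does; `LineParser` and `parseStream` are hypothetical names, and negative or non-integer readings are deliberately rejected.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical C++ mirror of the Pd chain: drop the 'constant' CR (13),
// collect the 'variable' bytes, and on LF (10) parse the ASCII digits.
class LineParser {
public:
    // Feed one byte (0-255), as [comport] outputs it. Returns true and sets
    // `value` when a complete, valid CR LF-terminated reading has arrived.
    bool feed(std::uint8_t byte, long& value) {
        if (byte == 13) return false;            // carriage return: ignore
        if (byte == 10) {                        // line feed: end of message
            const bool ok = parseDigits(buffer_, value);
            buffer_.clear();                     // start the next message
            return ok;
        }
        buffer_.push_back(static_cast<char>(byte));  // keep variable bytes
        return false;
    }

private:
    // Convert ASCII digits ('0' = 48 ... '9' = 57) into an integer;
    // empty or non-digit messages are rejected as garbled.
    static bool parseDigits(const std::string& text, long& value) {
        if (text.empty()) return false;
        long result = 0;
        for (char c : text) {
            if (c < '0' || c > '9') return false;
            result = result * 10 + (c - '0');
        }
        value = result;
        return true;
    }

    std::string buffer_;
};

// Run a whole stream of bytes through the parser and collect the readings.
std::vector<long> parseStream(const std::vector<int>& bytes) {
    LineParser parser;
    std::vector<long> readings;
    long value = 0;
    for (int b : bytes) {
        if (parser.feed(static_cast<std::uint8_t>(b), value)) readings.push_back(value);
    }
    return readings;
}
```

Feeding the bytes 49 50 51 13 10 52 53 13 10 yields the readings 123 and 45; a partial message without its closing LF is held back until the terminator arrives.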

Figure 2.1. Basic Pd patch for receiving serial data from Arduino microcontroller inputs via the [comport] object, outputting the decimal data by repacking and unpacking the data streams into single decimal values.

Figure 2.2. Identifying the 'constant' decimal values (13 10, the ASCII carriage-return and line-feed characters) and the 'variable' decimal values output by the [repack] object, as received by [comport] from Serial.write and shown in the Pd main window.

Figure 2.2. Basic Pure Data patch design for transmitting variables as MIDI messages to other MIDI inputs.
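Sending a sensor variable as a MIDI message involves two steps: rescaling the reading to MIDI's 7-bit range (0-127) and packing it into a message. The sketch below builds a standard three-byte Control Change message (status byte 0xB0 combined with the channel, then controller number and value). The helper names and the example input range of 2-200 cm for an ultrasonic distance reading are assumptions for illustration; in Pd itself, an object such as [ctlout] performs the packing.

```cpp
#include <algorithm>
#include <array>
#include <cstdint>

// Linearly rescale a reading from [inMin, inMax] to MIDI's 7-bit range 0-127,
// clamping readings outside the expected range.
std::uint8_t toMidiValue(double reading, double inMin, double inMax) {
    double t = (reading - inMin) / (inMax - inMin);
    t = std::max(0.0, std::min(1.0, t));
    return static_cast<std::uint8_t>(t * 127.0 + 0.5);  // round to nearest
}

// Build a 3-byte MIDI Control Change message. `channel` is 1-16, as in
// Pd's [ctlout]; on the wire it occupies the low nibble of the status byte
// as 0-15. Controller number and value are 7-bit (0-127).
std::array<std::uint8_t, 3> controlChange(int channel, int controller, std::uint8_t value) {
    return {{static_cast<std::uint8_t>(0xB0 | ((channel - 1) & 0x0F)),
             static_cast<std::uint8_t>(controller & 0x7F),
             static_cast<std::uint8_t>(value & 0x7F)}};
}
```

With the assumed 2-200 cm range, a reading of 101 cm maps to 64, and `controlChange(1, 7, 64)` yields the bytes 0xB0 7 64.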

Figure 2.3. Independent 'primitive' XY point visualiser (without mouse-drag point feedback) built on a canvas for serial input and MIDI output, as an alternative to the [grid] external object by Yves Degoyon (unauthorized Pd external library).

Figure 2.4. 3-D displacement visualisation of the 'sound-object' (red sphere) around a Malay Rebab model in GEM (Pure Data). Further visualisation rendering is possible via Processing.

Figure 2.5. 'Sound-object' x, y, z displacement variables sent into the 'sound-object' 3-D displacement visualisation GEM patch shown in Figure 2.4.

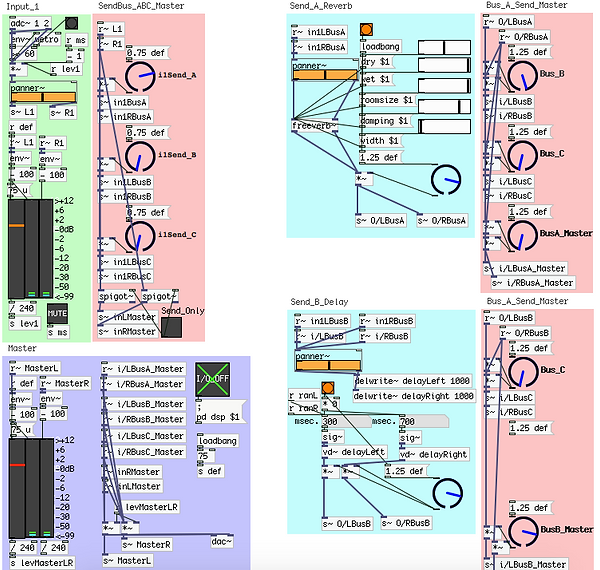

Figure 2.6. Stand-alone live audio mixing bus controller and processing patch for the open-source platform Pure Data, which avoids rewiring to another DAW, for instance Ableton, via a virtual audio bus such as Soundflower and a virtual MIDI bus such as IAC.

Video Demo 2.1. Stand-alone live audio mixing bus controller and processing patch for the open-source platform Pure Data, which avoids rewiring to another DAW, for instance Ableton, via a virtual audio bus such as Soundflower and a virtual MIDI bus such as IAC.

To be updated:

Draft

Video tracking in Pure Data (optical sensor): Kinect? (coverage?)

GPU + CPU load? Heavy... OpenCV? Live-stream processing stability: crashes?

Part 3: Hardware Interface

A pilot test of interfacing a motion capture sensor detector with Pure Data is carried out using an acoustic sensor detector, the HC-SR04 ultrasound transducer sensor module (Figure 3.1). The sensor module is connected to an Arduino UNO microcontroller (MCU), which controls the module's behaviour and passes its digital input-output data to the computer. This behaviour is programmed into the microcontroller using the Arduino Integrated Development Environment (IDE). Binary data (1-0; true-false; on-off) is exchanged between the microcontroller and the computer by converting the Transistor-Transistor Logic (TTL; Vcc-0V; high-low) levels of the MCU's onboard Universal Asynchronous Receiver/Transmitter (UART) into the USB serial communication protocol, so that the data reaches the computer's USB serial port over a USB cable; Bluetooth, Wi-Fi, and radio links are among the wireless alternatives. The Arduino code for the sensor module, with explanations, is shown in Figure 3.2 below. The data sent with Serial.write is received by the Pure Data [comport] object as described in Figure 2.1. Alternatively, Serial.print is more debugging-friendly, since it transfers the data to Pd's [comport] as 'chars', i.e. human-readable alphanumeric text (Drymonitis, A. 2018).
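The difference between `Serial.write` and `Serial.print` is easiest to see at the byte level, as the values [comport] would output. The hypothetical functions below emulate the bytes produced for one integer reading; they model the Arduino calls rather than call them. `Serial.write(value)` sends one raw byte, so values above 255 wrap to their lowest 8 bits, while `Serial.println(value)` sends ASCII digits followed by CR LF (13 10).

```cpp
#include <string>
#include <vector>

// Emulates the bytes [comport] receives for one integer reading. These are
// models of the Arduino calls, not the Arduino API itself.

// Serial.write(value): a single raw byte; an int argument is cast to 8 bits,
// so 300 arrives as 44 (300 - 256).
std::vector<int> bytesFromWrite(int value) {
    return {value & 0xFF};
}

// Serial.println(value): the number as ASCII characters, then CR (13) LF (10).
std::vector<int> bytesFromPrintln(int value) {
    std::vector<int> bytes;
    for (char c : std::to_string(value)) {
        bytes.push_back(static_cast<unsigned char>(c));  // e.g. '1' -> 49
    }
    bytes.push_back(13);  // carriage return
    bytes.push_back(10);  // line feed
    return bytes;
}
```

For a reading of 123, `Serial.write` yields the single value 123 in Pd, whereas `Serial.println` yields 49 50 51 13 10, which must be reassembled before use; readings above 255 sent with `Serial.write` would need to be split across two bytes.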

Figure 3.1. Pilot motion sensor test with an Arduino Uno MCU and an HC-SR04 ultrasound transducer sensor module; Echo at digital pin 9 and Trigger at digital pin 10.

Figure 3.2. Arduino IDE code for the Arduino UNO microcontroller to control the input-output behaviour of the ultrasound transducer sensor module.

To be updated:

IMU sensor, 6 degrees of freedom (Euclidean space)

Part 4: User Interface

To be updated:

Draft

Interfacing the Arduino MCU with Pd via an external wireless BLE (Bluetooth Low Energy) serial module (alternatively, a compact ESP32 MCU with built-in Bluetooth and Wi-Fi serial communication, which is compatible with the Arduino IDE, can be used, but its transmission range should be checked).

Putting it together, and other possibilities:

remote serial communication: BLE types and coverage.

portable power supply.

wearable ergonomics and safety.

Figure 4.1. Wireless serial communication test and transmission signal coverage distance measurement with an Arduino Uno MCU and AT-09 BLE 4.0 module.

References:

Albakri, I. F., Wafiy, N., Suaib, N. M., Rahim, M. S. M., & Yu, H. (2020). 3D Keyframe Motion Extraction from Zapin Traditional Dance Videos. In Computational Science and Technology (pp. 65-74). Springer, Singapore.

Bevilacqua, F., Naugle, L., & Dobrian, C. (2001, April). Music control from 3D motion capture of dance. In CHI2001 for the NIME workshop.

Chung, B. W. (2013). Multimedia Programming with Pure Data: A comprehensive guide for digital artists for creating rich interactive multimedia applications using Pure Data. Packt Publishing Ltd.

Drymonitis, A. (2014). Code, Arduino for Pd'ers. Retrieved from http://drymonitis.me/code/

FLOSS Manuals. Pduino. Retrieved from http://write.flossmanuals.net/pure-data/pduino/

Goebl, W., & Palmer, C. (2013). Temporal control and hand movement efficiency in skilled music performance. PLoS ONE, 8(1), e50901.

Konopka, C. (2018). Arduivis. Retrieved from https://github.com/cskonopka

Kreidler, J. (2009). Programming electronic music in Pd.

Menolotto, M., Komaris, D. S., Tedesco, S., O’Flynn, B., & Walsh, M. (2020). Motion Capture Technology in Industrial Applications: A Systematic Review. Sensors, 20(19), 5687.

Musil, T. (2005). iem_anything-help.pd. iem-anything object, Pure Data external package library. Retrieved from https://puredata.info/downloads/pd-iem/view

Mustaffa, N., & Idris, M. Z. (2020). Analysing Step Patterns on the Malaysian Folk Dance Zapin Lenga. Journal of Computational and Theoretical Nanoscience, 17(2), 1503-1510.

Patrick, D. R., & Fardo, S. W. (2008). Electricity and electronics fundamentals (2nd ed.). Fairmont Press. Retrieved from https://ebookcentral.proquest.com/lib/bristol/detail.action?docID=3239060

Peach, M. (2010). comport-help.pd. comport object, Pure Data external package library. Retrieved from https://puredata.info/downloads/comport

Rahul, M. (2018). Review on motion capture technology. Global Journal of Computer Science and Technology.

Rumsey, F., & McCormick, T. (2014). Sound and recording: applications and theory (7th ed.). Focal Press. Retrieved from https://ebookcentral.proquest.com/lib/bristol/detail.action?docID=1638630

Sarbolandi, H., Lefloch, D., & Kolb, A. (2015). Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Computer Vision and Image Understanding, 139, 1-20.